In Part 1 you learnt how to deliver video content with the Nutanix Cloud Native stack. In this Part 2 you will learn how to prepare a video source for delivery by encoding or transcoding it into several resolutions and formats.

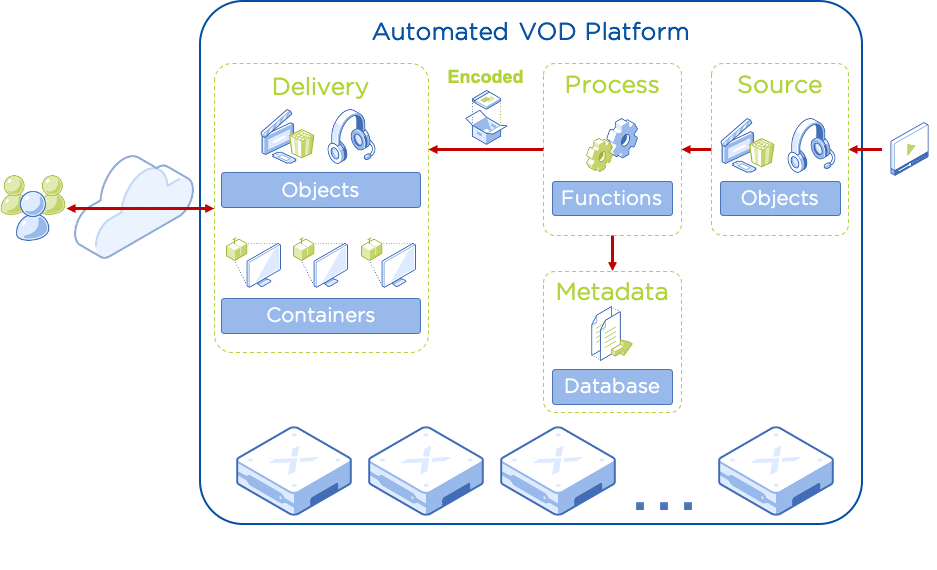

Architecture

The Nutanix products that make up this solution are:

- Hyperconverged Infrastructure. Intelligent infrastructure for delivering all applications, services and data at any scale.

- AHV. License-free virtualisation for your HCI platform.

- Objects. S3-compatible object storage that can be enabled with one click.

- Karbon. License-free, CNCF-certified enterprise Kubernetes that can be enabled with one click.

- Calm. Deliver hybrid cloud infrastructure and application services with automation and self-service.

The HCI platform runs AHV with the Objects, Karbon and Calm services enabled. To serve the video content hosted in Objects through the frontend running in Kubernetes, I’m using a trial version of Unified Origin by Unified Streaming.

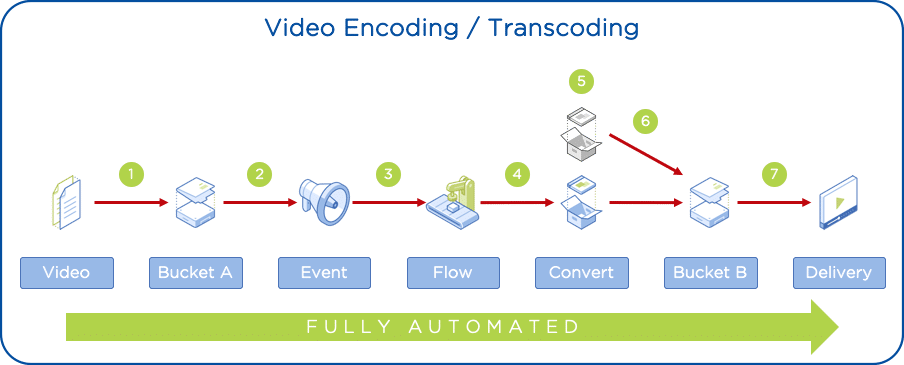

How it works

- A video file is uploaded to bucket A (raw-content)

- The Objects instance sends an event notification to NATS Streaming when an s3:ObjectCreated:Put event is triggered

- Node-RED, which is subscribed to the NATS Streaming cluster, receives the new event notification

- This triggers a Node-RED flow that sends a request to a serverless function in Nuclio

- The Python function in Nuclio downloads the video from bucket A and uses FFMPEG to create four new MP4 files at different qualities, plus a manifest

- The function uploads the resulting files to bucket B (vod-content)

- The content is now available for delivery by Unified Origin, as set up in Part 1
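The chain starts with a JSON notification from Objects. As a minimal sketch, assuming Objects emits records in the AWS S3 event layout (`Records[].eventName`, `Records[].s3.bucket.name`, `Records[].s3.object.key`), the first job of the function is to pull out the bucket and key of the uploaded video. The helper name `extract_uploads` is hypothetical, and you should check a real notification payload from your Objects instance before relying on these field names.

```python
from __future__ import annotations

import json
from urllib.parse import unquote_plus


# Assumption: Objects sends S3-style event records. The field names follow the
# AWS S3 event notification layout, not a confirmed Nutanix Objects schema.
def extract_uploads(body: bytes) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs for every object-created record in the event."""
    payload = json.loads(body)
    uploads = []
    for record in payload.get("Records", []):
        # Only react to object-created events such as s3:ObjectCreated:Put.
        if "ObjectCreated" not in record.get("eventName", ""):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # AWS S3 URL-encodes keys in notifications (spaces become '+');
        # assumed to be the same for Objects.
        key = unquote_plus(record["s3"]["object"]["key"])
        uploads.append((bucket, key))
    return uploads


event = (
    b'{"Records": [{"eventName": "s3:ObjectCreated:Put",'
    b' "s3": {"bucket": {"name": "raw-content"}, "object": {"key": "sample.mov"}}}]}'
)
print(extract_uploads(event))  # [('raw-content', 'sample.mov')]
```

Filtering on the event name inside the function is a second line of defence; the Objects notification rule configured later already limits events to PUT.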

Installation

This article assumes you have completed Part 1 and have the following resources available:

- Cluster with AHV

- Prism Central 5.17 or above with these services enabled: Calm 2.10 or above; Objects 2.2 or above; and Karbon 2.0.1 or above

- A Karbon Kubernetes cluster

- KUBECONFIG file for the Kubernetes cluster

- kubectl client

- A working Objects instance

- A trial license for Unified Streaming software

- VLC media player

- VOD Objects bucket (vod-content)

- VOD Calm blueprint (k8s-vod)

- Docker Hub account

Create raw-content bucket and grant access

In this step you will create an Objects bucket where the raw video files are uploaded. The user created in Part 1 will also be granted read and write access to this bucket.

- Click Prism Central > Services > Objects

- Click on your Object Store instance

- Click Create Bucket

- Name: raw-content

- Click Create

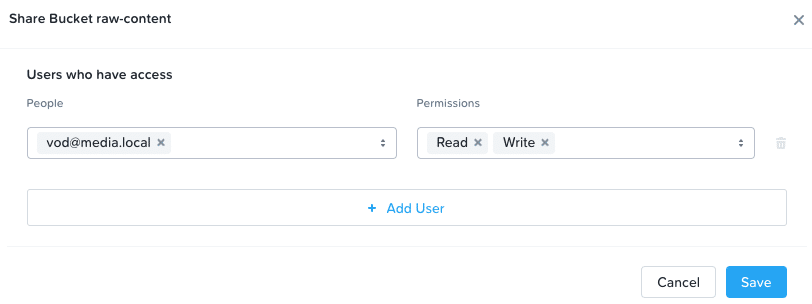

Finally, grant the user vod@media.local read/write access:

- Select raw-content bucket

- Click Actions > Share

- People: vod@media.local

- Permissions: read/write

- Click Save

Deploy NATS Streaming

NATS is a modern messaging system that you will use to receive event notifications from Objects when a file is uploaded to, or updated in, the raw-content bucket.

To deploy NATS Server and NATS Streaming, you need access to a Kubernetes cluster.

- Create a namespace

kubectl create namespace nats

- Deploy NATS Server

kubectl -n nats apply -f https://raw.githubusercontent.com/nats-io/k8s/master/nats-server/single-server-nats.yml

- Change service to NodePort

kubectl -n nats patch svc nats --type='json' -p '[{"op":"replace","path":"/spec/type","value":"NodePort"}]'

- Deploy NATS Streaming

kubectl -n nats apply -f https://raw.githubusercontent.com/nats-io/k8s/master/nats-streaming-server/single-server-stan.yml

- Check all the pods are running

kubectl -n nats get pods

You should get output similar to the following:

NAME     READY   STATUS    RESTARTS   AGE
nats-0   1/1     Running   0          1m
stan-0   1/1     Running   0          1m38s

- Get service port and worker IP address

kubectl -n nats get services nats

Take note of the NodePort mapped to service port 4222, as you will need it later. In my case, 4222 is mapped to 30175.

NAME   TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)                                                                                      AGE
nats   NodePort   172.19.41.39   <none>        4222:30175/TCP,6222:31085/TCP,8222:30938/TCP,7777:31856/TCP,7422:32385/TCP,7522:31152/TCP   13m

- Get the Kubernetes worker IP address

kubectl get nodes -o wide

My worker IP address is 192.168.108.251.

NAME                                 STATUS   ROLES    AGE   VERSION    INTERNAL-IP       EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION               CONTAINER-RUNTIME
karbon-k8s-vod-97b11d-k8s-master-0   Ready    master   31m   v1.16.10   192.168.108.15    <none>        CentOS Linux 7 (Core)   3.10.0-1127.8.2.el7.x86_64   docker://18.9.8
karbon-k8s-vod-97b11d-k8s-worker-0   Ready    node     29m   v1.16.10   192.168.108.251   <none>        CentOS Linux 7 (Core)   3.10.0-1127.8.2.el7.x86_64   docker://18.9.8

My NATS instance is therefore reachable at 192.168.108.251:30175.
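If you prefer to script the lookup instead of reading the table, a minimal sketch is to ask kubectl for the Service as JSON and pick the `nodePort` whose `port` is 4222. The function names below are hypothetical helpers; they only rely on the standard Kubernetes Service fields `spec.ports[].port` and `spec.ports[].nodePort`, and `get_service` assumes kubectl and your KUBECONFIG are already set up.

```python
import json
import subprocess


def get_service(namespace: str, name: str) -> dict:
    """Fetch a Service object as JSON via kubectl (kubectl/KUBECONFIG assumed)."""
    out = subprocess.run(
        ["kubectl", "-n", namespace, "get", "svc", name, "-o", "json"],
        capture_output=True,
        check=True,
    ).stdout
    return json.loads(out)


def node_port(service: dict, port: int) -> int:
    """Return the NodePort that Kubernetes mapped to the given service port."""
    for entry in service["spec"]["ports"]:
        if entry["port"] == port:
            return entry["nodePort"]
    raise KeyError(f"port {port} not exposed by service {service['metadata']['name']}")


def endpoint(worker_ip: str, service: dict, port: int) -> str:
    """Build the host:port string expected by the Objects notification settings."""
    return f"{worker_ip}:{node_port(service, port)}"


# Example (requires cluster access):
# endpoint("192.168.108.251", get_service("nats", "nats"), 4222)
```

The same helpers work for the Nuclio dashboard (port 8070) and Node-RED (port 1880) services later in this article.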

Enable Objects notifications

Now that you have a NATS Streaming instance, it’s time to configure the Objects notifications.

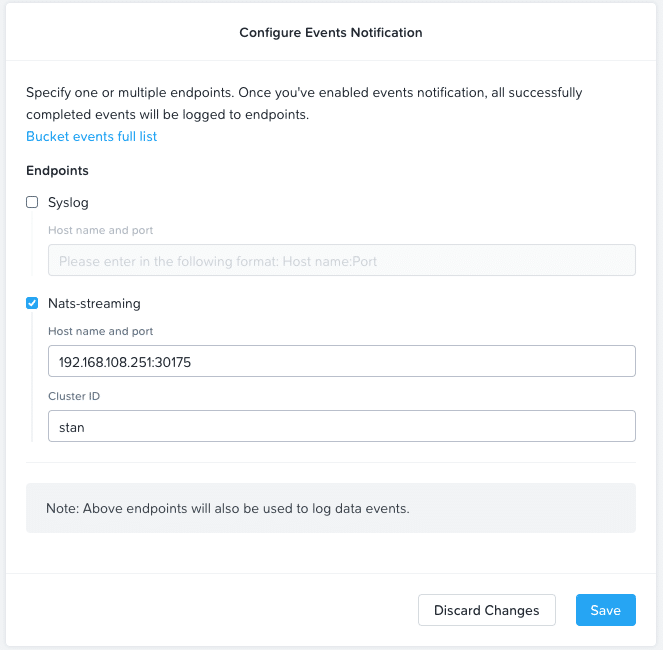

- In your Object Store instance, click Settings > Notification

- Enable Nats-streaming

- Host name and port: <K8s_Worker_IP>:<NATS_Service_Port>. For example: 192.168.108.251:30175

- Cluster ID: stan

- Click Save

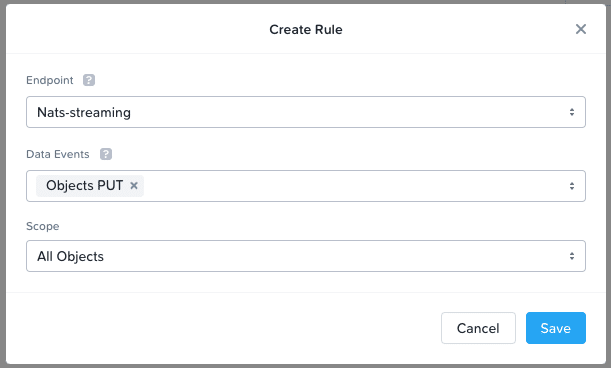

Now select which buckets send notifications. You will enable notifications on the raw-content bucket only.

- Select the raw-content bucket

- Click Actions > Data Events Notification

- Click + Create Rule

- Endpoint: Nats-streaming

- Data Events: Objects PUT (you only want to trigger the function when a video is uploaded or updated)

- Scope: All Objects

- Click Save

- Click Done

To confirm that notifications are working, check the logs of the STAN pod in Kubernetes. At the end of the logs you should see an entry with the message: Channel "OSSEvents" has been created.

- Check stan-0 pod logs

kubectl -n nats logs stan-0

You should get output similar to the following:

[1] 2020/07/27 16:50:52.465221 [INF] STREAM: Starting nats-streaming-server[stan] version 0.16.2
[1] 2020/07/27 16:50:52.465281 [INF] STREAM: ServerID: DmUu734hZXN19tfecXCTAA
[...]
[1] 2020/07/27 16:50:52.728427 [INF] STREAM: ----------------------------------
[1] 2020/07/27 16:50:52.728429 [INF] STREAM: Streaming Server is ready
[1] 2020/07/27 16:58:49.403559 [INF] STREAM: Channel "OSSEvents" has been created
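If you want to automate this check, for example in a smoke test after deployment, a minimal sketch is to search the log text for the channel-created message shown above. The function names are hypothetical, and the regular expression only matches the exact wording from this sample log.

```python
import re

# Matches log lines such as: STREAM: Channel "OSSEvents" has been created
CHANNEL_CREATED = re.compile(r'Channel "(?P<name>[^"]+)" has been created')


def created_channels(log_text: str) -> list:
    """Return the names of all channels the log reports as created."""
    return [m.group("name") for m in CHANNEL_CREATED.finditer(log_text)]


def notifications_ready(log_text: str, channel: str = "OSSEvents") -> bool:
    """True once the given channel has been created on the STAN server."""
    return channel in created_channels(log_text)


# Usage: pass in the output of `kubectl -n nats logs stan-0`, e.g. read from a file.
sample = '[1] 2020/07/27 16:58:49.403559 [INF] STREAM: Channel "OSSEvents" has been created\n'
print(notifications_ready(sample))  # True
```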

Creating a serverless function for encoding/transcoding

A Python function running in Nuclio is responsible for reading the notification from Objects, downloading the file from the raw-content bucket, encoding/transcoding it with FFMPEG, and finally uploading the new files to the vod-content bucket.
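To make the encode/transcode step more concrete, here is a minimal sketch of how such a function could build one FFMPEG command per output rendition. The four heights and bitrates, and the output naming scheme, are assumptions for illustration, not the values used by the example function you will import. Each command is returned as an argument list suitable for `subprocess.run`; nothing is executed here.

```python
import shlex
from pathlib import PurePosixPath

# Hypothetical rendition ladder: (output height, video bitrate).
RENDITIONS = [(1080, "4500k"), (720, "2500k"), (480, "1200k"), (360, "700k")]


def ffmpeg_commands(source: str, out_dir: str) -> list:
    """Return one ffmpeg argument list per rendition of `source`."""
    stem = PurePosixPath(source).stem  # sample.mov -> sample
    commands = []
    for height, bitrate in RENDITIONS:
        output = str(PurePosixPath(out_dir) / f"{stem}-{height}p.mp4")
        commands.append([
            "ffmpeg", "-y", "-i", source,
            "-c:v", "libx264", "-b:v", bitrate,
            "-vf", f"scale=-2:{height}",  # keep aspect ratio with an even width
            "-c:a", "aac", "-b:a", "128k",
            output,
        ])
    return commands


for cmd in ffmpeg_commands("/tmp/sample.mov", "/tmp/out"):
    # In a real function you would run: subprocess.run(cmd, check=True)
    print(shlex.join(cmd))
```

Running the renditions one after another keeps resource use predictable inside a single pod; a single ffmpeg invocation with several outputs would decode the source only once, at the cost of a more complex command line. The manifest creation with the Unified Streaming tooling is not covered by this sketch.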

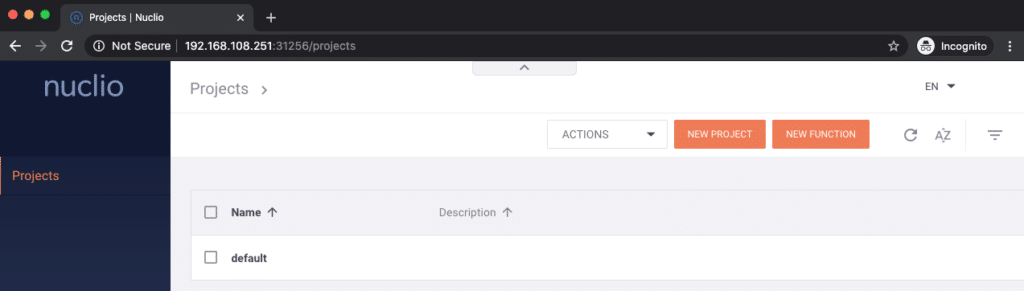

To run the function you will use Nuclio, which provides a simple way to run serverless functions in Kubernetes. Deploy it in the same Kubernetes cluster where NATS is running.

- Create a namespace

kubectl create namespace nuclio

- Nuclio requires access to a container registry to push the resulting container image with the Python function. This example uses Docker Hub; you can create a free account if you don’t already have one. Replace the Docker username with yours (ukdemo in my case):

read -s mypassword
kubectl -n nuclio create secret docker-registry registry-credentials \
  --docker-username ukdemo \
  --docker-password "$mypassword" \
  --docker-server registry.hub.docker.com \
  --docker-email ignored@nuclio.io
unset mypassword

- Deploy Nuclio Server

kubectl apply -f https://raw.githubusercontent.com/nuclio/nuclio/master/hack/k8s/resources/nuclio-rbac.yaml

kubectl apply -f https://raw.githubusercontent.com/nuclio/nuclio/master/hack/k8s/resources/nuclio.yaml

- Change service to NodePort

kubectl -n nuclio patch svc nuclio-dashboard --type='json' -p '[{"op":"replace","path":"/spec/type","value":"NodePort"}]'

- Check all the pods are running

kubectl -n nuclio get pods

You should get output similar to the following:

NAME                                 READY   STATUS    RESTARTS   AGE
nuclio-controller-7b886dc747-5vxj8   1/1     Running   0          1m
nuclio-dashboard-69b5dd-22mlb        1/1     Running   0          1m

- Get service port

kubectl -n nuclio get services nuclio-dashboard

Take note of the NodePort mapped to service port 8070, as you will need it later. In my case, 8070 is mapped to 31256.

NAME               TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
nuclio-dashboard   NodePort   172.19.197.179   <none>        8070:31256/TCP   1m

In your web browser, open the Nuclio dashboard using the IP address of one of your Kubernetes workers and the Nuclio service port. In my case the URL is http://192.168.108.251:31256

- The function needs the Objects access keys for the vod@media.local user as well as the Unified Origin license key. Create Kubernetes secrets for them:

read -s accesskey

read -s secretkey

kubectl -n nuclio create secret generic objects-secret --from-literal=accesskey="$accesskey" --from-literal=secretkey="$secretkey"

read -s usp_license_key

kubectl -n nuclio create secret generic usp-license --from-literal=usp_license_key="$usp_license_key"

unset accesskey secretkey usp_license_key
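Note that `kubectl create secret generic --from-literal` base64-encodes each value itself when it writes the Secret’s `data` field, so the keys should be entered in plain text. The sketch below, using only the Python standard library, mimics what ends up in the Secret with and without pre-encoding; the helper names and the sample key value are made up for illustration.

```python
import base64


def secret_data_value(literal: str) -> str:
    """Mimic what kubectl stores in Secret.data for --from-literal=name=<literal>."""
    return base64.b64encode(literal.encode()).decode()


def decoded_in_pod(data_value: str) -> str:
    """What the pod sees when the Secret is injected as an env var or file."""
    return base64.b64decode(data_value).decode()


access_key = "EXAMPLEACCESSKEY"  # made-up value

# Plain literal: the pod gets the original key back.
print(decoded_in_pod(secret_data_value(access_key)))  # EXAMPLEACCESSKEY

# Pre-encoded literal: the pod receives the base64 text instead of the key.
pre_encoded = base64.b64encode(access_key.encode()).decode()
print(decoded_in_pod(secret_data_value(pre_encoded)) == access_key)  # False
```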

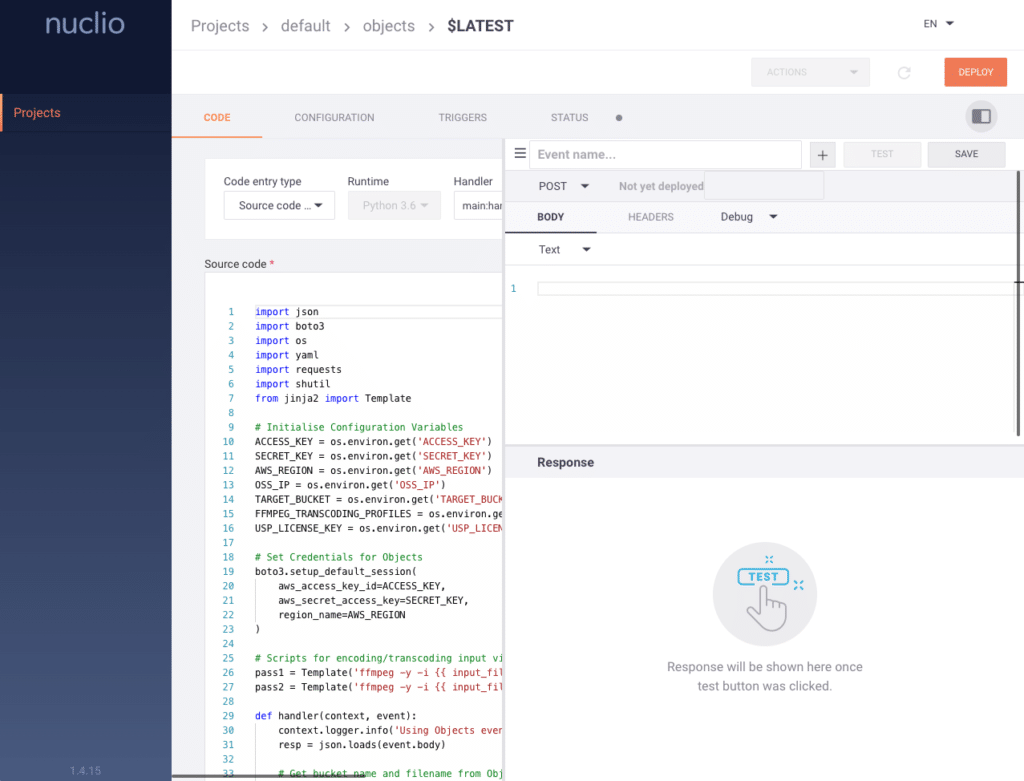

Instead of writing the Python function from scratch, you will import an example.

In the Nuclio dashboard, run the following steps:

- Click New function

- Click Import

- Paste the YAML content or click Import to select the YAML file. Get the file from here

- Click Create

You should get a screen like below.

Before you can deploy the function, make sure to update the values in the configuration tab.

- Click Configuration

- Scroll down

- Update the values for:

- OSS_IP. This is the IP address of your Nutanix Objects instance.

- TARGET_BUCKET. This is the vod-content bucket, which you should already have from Part 1, used to store the content for delivery.

- Click Deploy
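Inside the function, values such as OSS_IP and TARGET_BUCKET typically arrive as environment variables. Here is a minimal sketch of how a handler could load and validate them before doing any work. It assumes the secrets are exposed as ACCESS_KEY and SECRET_KEY (hypothetical names, so check the env section of the imported function) and that Objects serves S3 over plain HTTP on OSS_IP, matching the REMOTE_STORAGE_URL format used later in this article.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class FunctionConfig:
    endpoint_url: str   # S3 endpoint of the Objects instance
    target_bucket: str  # e.g. vod-content
    access_key: str
    secret_key: str


# OSS_IP and TARGET_BUCKET come from this article; the key names are hypothetical.
REQUIRED = ("OSS_IP", "TARGET_BUCKET", "ACCESS_KEY", "SECRET_KEY")


def load_config(env: Mapping[str, str] = os.environ) -> FunctionConfig:
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        # Fail with a clear message instead of halfway through a transcode.
        raise RuntimeError(f"missing configuration: {', '.join(missing)}")
    return FunctionConfig(
        endpoint_url=f"http://{env['OSS_IP']}",
        target_bucket=env["TARGET_BUCKET"],
        access_key=env["ACCESS_KEY"],
        secret_key=env["SECRET_KEY"],
    )
```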

Let’s look in more detail at what happens when you click Deploy:

- Nuclio builds a container image based on python:alpine. You can see this in the build section of the function’s configuration tab. python:alpine is not Nuclio’s default Python image, but it is needed for the Unified Streaming software that creates the manifest file for the video content, and for the FFMPEG library used to encode/transcode it.

- The container image is pushed into the container registry. In this example, into Docker Hub.

- Nuclio then uses the container image to create a Kubernetes deployment and exposes it as a Kubernetes NodePort service.

kubectl -n nuclio get deployments && kubectl -n nuclio get services

NAME                READY   UP-TO-DATE   AVAILABLE   AGE
nuclio-controller   1/1     1            1           2d4h
nuclio-dashboard    1/1     1            1           2d4h
nuclio-objects      1/1     1            1           1m

NAME               TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
nuclio-dashboard   NodePort   172.19.253.83    <none>        8070:31256/TCP   2d4h
nuclio-objects     NodePort   172.19.196.129   <none>        8080:32607/TCP   1m

- The nuclio-objects deployment and service are the function you just deployed. You can check that the function is alive by opening a web browser at the IP address of one of your Kubernetes workers and the port allocated to the nuclio-objects service (32607 in my case). You will get an error, because the Python function expects the event generated by Objects, which the browser does not send. Take note of the function port, because you will need it for the event automation in Node-RED.
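The browser error is expected. A Nuclio Python function exposes a `handler(context, event)` entry point and reads the request payload from `event.body`; a plain browser GET carries no body, so there is nothing to process. Below is a minimal sketch of such a guard, with the actual download, transcode and upload steps omitted, and with the `Records` check assuming an S3-style event payload.

```python
import json


def handler(context, event):
    """Nuclio entry point: validate the Objects event, then process it."""
    body = event.body
    if isinstance(body, (bytes, bytearray)):
        body = body.decode("utf-8")
    if not body:
        # A browser GET sends no body, so the function fails here.
        raise ValueError("expected an Objects event in the request body")
    # Nuclio may already have decoded a JSON body into a dict.
    payload = json.loads(body) if isinstance(body, str) else body
    if "Records" not in payload:
        raise ValueError("request body is not an Objects event notification")
    context.logger.info(f"processing {len(payload['Records'])} record(s)")
    # ... download from raw-content, transcode, upload to vod-content ...
    return "Done"
```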

Your Python function is ready to receive requests from Node-RED.

Configuring event-driven automation

At the time of writing, serverless solutions such as Nuclio, Knative and OpenFaaS support NATS Server but not NATS Streaming. For this reason we need a “connector” between NATS Streaming and Nuclio.

This “connector” will run in Node-RED, a popular event-driven automation tool in the IoT space.

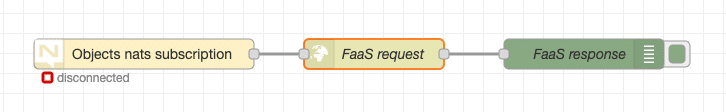

This is the flow that you will import:

- The flow subscribes to the OSSEvents channel in NATS Streaming

- When an event is received, an HTTP POST request is sent to the Python function URL, with the Objects event details in the request body

- The function extracts the details, builds the path to download the file from the raw-content bucket, encodes/transcodes the file, and uploads the resulting files to the vod-content bucket

- When the function finishes, it returns the response Done
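Node-RED implements this with its built-in nodes. For readers who prefer to see the connector logic as code, here is a minimal Python sketch of the forwarding step only: it wraps the NATS Streaming message payload in an HTTP POST to the function URL. The NATS subscription side is omitted, the helper names are hypothetical, and the URL is the example function URL from this article.

```python
import json
import urllib.request

FUNCTION_URL = "http://192.168.108.251:32607"  # example function URL from this article


def build_function_request(nats_payload: bytes, url: str = FUNCTION_URL) -> urllib.request.Request:
    """Wrap an Objects event received from NATS Streaming in a POST to the function."""
    json.loads(nats_payload)  # fail early if the message is not valid JSON
    return urllib.request.Request(
        url,
        data=nats_payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def forward(nats_payload: bytes, timeout: float = 3600) -> str:
    """Send the request and return the function's reply (expected: Done)."""
    # Long timeout: the reply only arrives after the transcode has finished.
    with urllib.request.urlopen(build_function_request(nats_payload), timeout=timeout) as resp:
        return resp.read().decode()
```

Because the function replies only when all renditions are uploaded, the timeout has to cover your longest video, which matches the requesting status Node-RED shows while a video is being processed.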

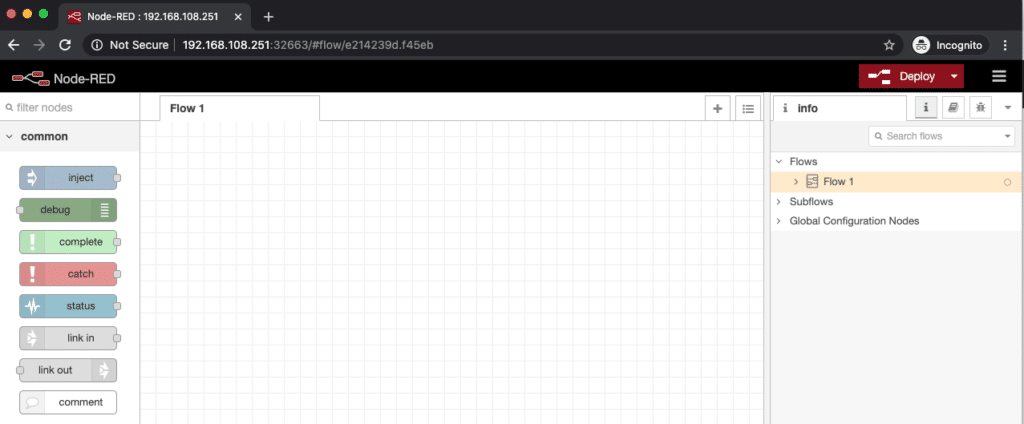

Let’s deploy Node-RED in the Kubernetes cluster first:

- Create a namespace

kubectl create namespace node-red

- Deploy Node-RED

kubectl -n node-red apply -f https://raw.githubusercontent.com/pipoe2h/ntnx-objects-nats/master/kubernetes/node-red.yaml

- Check the pod is running

kubectl -n node-red get pods

You should get output similar to the following:

NAME        READY   STATUS    RESTARTS   AGE
nodered-0   1/1     Running   0          1m

- Get service port

kubectl -n node-red get services

Take note of the NodePort mapped to service port 1880, as you will need it later. In my case, 1880 is mapped to 32663.

NAME       TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
node-red   NodePort   172.19.128.144   <none>        1880:32663/TCP   1m

In your web browser, open the Node-RED dashboard using the IP address of one of your Kubernetes workers and the Node-RED service port. In my case the URL is http://192.168.108.251:32663

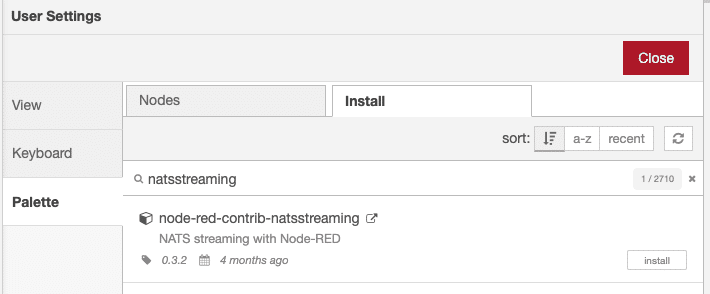

Node-RED needs the NATS Streaming palette. To install it:

- Click the hamburger menu in the top right corner

- Click Manage palette

- Click Install

- Search for natsstreaming

- Click Install for the palette node-red-contrib-natsstreaming

- Click Close

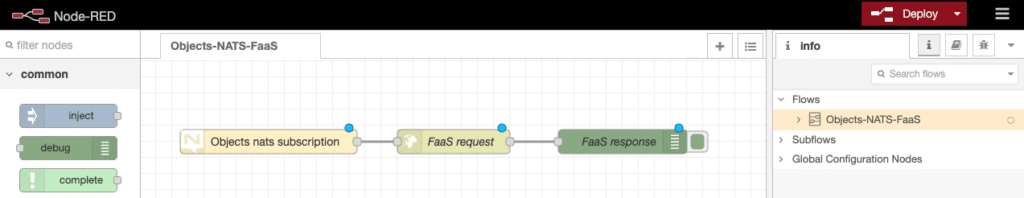

Like you did with Nuclio before, you will import a flow into Node-RED so you don’t need to create this from scratch.

- Click the hamburger menu in the top right corner

- Click Import

- Paste the JSON content, or click "select a file to import" to choose the JSON file. Get the file from here

- A new flow called Objects-NATS-FaaS is created

- To delete the empty Flow 1, select it first

- Click the hamburger menu in the top right corner

- Click Flows > Delete

- You should have a single flow now

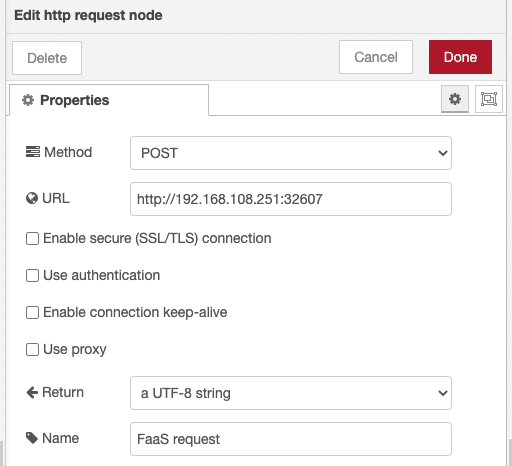

Next, update the function URL in the flow.

- Double-click the FaaS request node

- Update URL with the function URL you collected in the previous section. In my case http://192.168.108.251:32607

- Click Done

The flow is ready for deployment. Click Deploy in the top right corner.

If your NATS subscription shows as disconnected, don’t worry: this is common behaviour when enabling a durable NATS subscription in Node-RED.

The node retries after 60 seconds. If the status does not change to connected, you may have an issue with your NATS configuration.

Your Node-RED flow is now ready to listen for events coming from NATS and to call the Python function in Nuclio.

Testing the solution

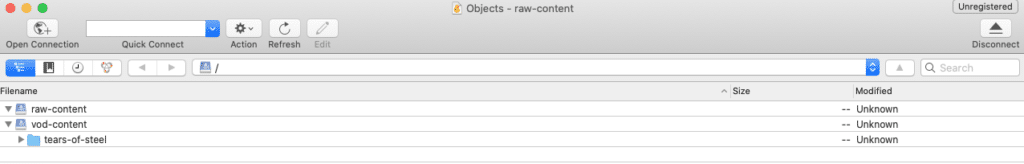

The solution is ready to be tested. You need a sample video that can be uploaded to the raw-content bucket using a client like Cyberduck.

- In a terminal, follow the logs of the Kubernetes pod running the Python function so you can see the FFMPEG output during encoding/transcoding

kubectl -n nuclio logs -f -l nuclio.io/function-name=objects

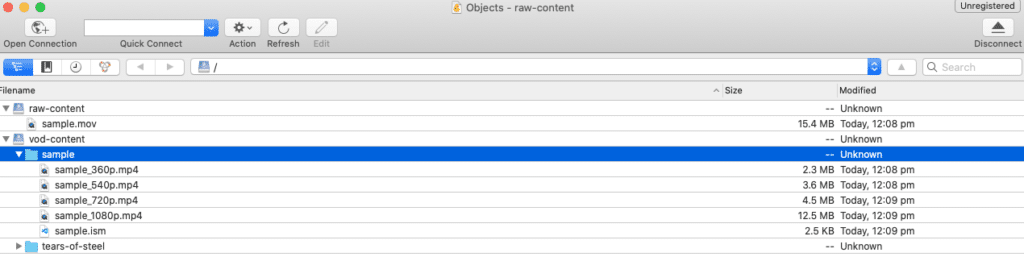

- Open Cyberduck and, if you don’t already have one, create a connection to your Objects instance using the credentials of the vod@media.local account. You should see two buckets: raw-content, created in this post, and vod-content, from Part 1

- Upload your sample video to the raw-content bucket and watch the pod log output. In Node-RED, the FaaS request node should show the status requesting

- The total time varies with the size of your video. Once the process has finished and Node-RED shows Done, a new folder appears in the vod-content bucket containing several video files and the manifest for Unified Origin

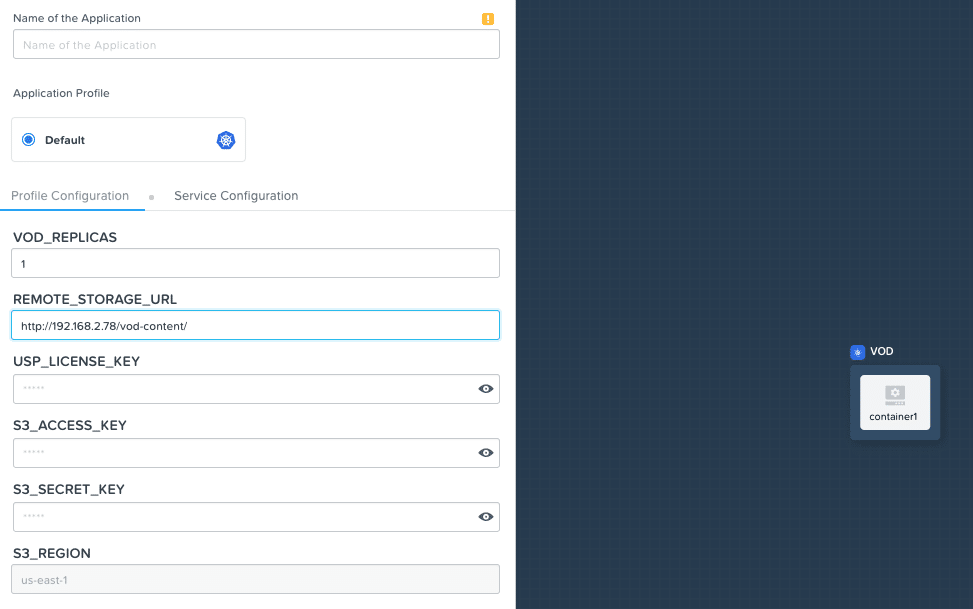

Playing your video

The last step is to watch the video with VLC and confirm the content is delivered by Unified Origin. For this task you will use the Calm blueprint you created in Part 1. There is one small difference: this time the REMOTE_STORAGE_URL variable points to the vod-content bucket (http://<Objects_Instance_IP>/vod-content/) rather than to a specific folder, so you can access all the different videos.

To avoid conflicts with the previous Kubernetes deployment, I recommend deleting any application you have running with Unified Origin and deploying a new one.

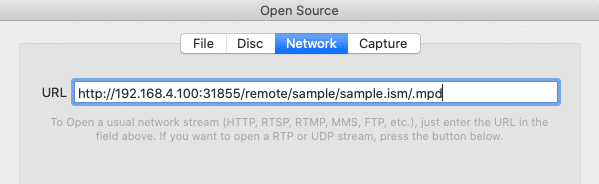

Once the Calm application is running, open VLC, add the network source http://<K8sWorker>:<port>/remote/<new_folder_name>/<file.ism>/.mpd and click Open. To get your Kubernetes worker IP address and the new service port, refer to the section Playing the Video Content in Part 1. In my case the uploaded video file was sample.mov, so the folder is named sample and the manifest sample.ism.

After a few seconds the video should start playing in VLC.
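The playback URL follows directly from the name of the uploaded file. Here is a minimal sketch, assuming (as in the sample.mov example) that the function names both the output folder and the manifest after the file name without its extension. The function name is hypothetical, and port 31000 is a made-up stand-in for your Unified Origin service port.

```python
from pathlib import PurePosixPath


def dash_url(worker_ip: str, node_port: int, uploaded_file: str) -> str:
    """Build the VLC network URL for a video processed by the pipeline."""
    folder = PurePosixPath(uploaded_file).stem  # sample.mov -> sample
    manifest = f"{folder}.ism"
    return f"http://{worker_ip}:{node_port}/remote/{folder}/{manifest}/.mpd"


print(dash_url("192.168.108.251", 31000, "sample.mov"))
# http://192.168.108.251:31000/remote/sample/sample.ism/.mpd
```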

Conclusion

Using cloud native services to modernise your content delivery is not something only the public cloud can offer. With the Nutanix DevOps solution you can run cloud native applications anywhere, on-prem or in the public cloud with Nutanix Clusters, covering everything from object storage to Kubernetes clusters, end-to-end automation and more.