Summary

Large Language Models (LLMs) based on the transformer architecture (for example, GPT, T5, and BERT), have achieved state-of-the-art (SOTA) results in various Natural Language Processing (NLP) tasks, especially for text generation and conversational bots. The real benefits of LLMs can be tapped only when they can be translated into applications which generate end-user values. LLM application development involves LLM service, data connectors, raw data to prompt converter template, output parsing, and operationalizing auxiliary services such as vector databases, message queue, and others. As applications become more heterogeneous, an LLM application becomes prohibitively complex with multiple moving pieces. Another design challenge comes from data security with many LLM apps depending on third-party API services from organizations such as OpenAI, Hugging Face. In this scenario, zero-trust organizations such as banks, hospitals, and similar organizations demand security posture hardening by running the LLM models private and eliminating the need for the third-party API services. In this context, LangChain is a prevalent orchestration platform to glue together different application components.

In this article, we present how a private LLM application can be developed using LangChain on Nutanix Cloud Platform (NCP), which is optimized for easy manageability and resiliency with state-of-the-art AI infrastructure including high-performance GPU, fast NVMe, and storage scaling.

Benefits of Private LLM Applications

Some of the reasons why you may need to run your model locally, and not use an external API server, include: Local model hosting is preferable over sending POST request to an external API server for the following reasons:

- Security: As you send data to external API servers, it compromises the enterprise attack services, increasing the risk of the man-in-the-middle (MITM) attack.

- Cost: As you send data to an external API, there is a cost associated with data transfer.

- Performance: The risk of downtime due to traffic congestion, configuration, and slow down due to long-tail latency increase significantly as you introduce an external API in your critical application component.

LangChain

The burgeoning LLM ecosystem comes with three specialized domains: first, foundation models and related algorithmic techniques such as instruction-tuning, PEFT, QLoRA, quantization, in-context learning, reinforcement learning on human feedback; second, infrastructure resource related elements such as compute accelerators, MLflow, deepspeed, and application development platforms such as LangChain, LlamaIndex. LangChain is an orchestration framework for developing applications powered by language models. It is claimed to be data-aware, meaning they allow an LLM model to interact with data sources, and agentic, meaning they allow an LLM model to interact with its environment. It comes with modules such as model I/O, data connection, chains, agents, memory and callbacks.

Model I/O

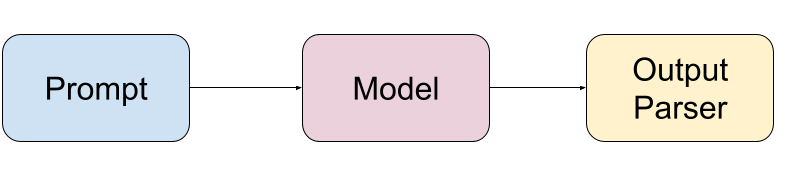

LangChain provides the constituents to interface with any language model. The key constituents are: prompts to templatize, dynamically select, and manage model inputs; model to invoke the LLM service; and output parser to format model output. Figure 1 shows how different Model I/O components interact.

Data Connection

Many LLM applications need user-specific data that is not part of the model’s training set. LangChain offers an ETL pipeline to load, transform, store and query your data with its Document loaders, Document transformers, Text embedding models, Vector stores, and Retrievers.

Chain

Chains enable users to combine multiple components together to compose a single, coherent application. For example, a chain takes user input, formats it with a PromptTemplate, and then passes the formatted response to an LLM. More complex chains can combine multiple chains together. The foundational pieces for LangChain are LLM, router, sequential, and transformation.

Memory

Most LLM applications are centered around a conversational interface. It demands reference to information introduced earlier in a conversation. At its core, a conversational interface needs access to some window of previous messages. A more complex system needs a world model which allows it to do things like maintain information about entities and their relationships. The ability to reference past information is called memory. A memory system requires basic capabilities such as reading and writing.

Agent

Agent can be dubbed as an automated chain that uses an LLM to choose a sequence of actions. In chains, a sequence of actions is hard-coded. In agents, an LLM is used as a reasoning engine to determine which actions to take and in which order.

Callbacks

A callbacks system allows hooking into the various events of your LLM application. This is useful for logging, monitoring, streaming, and other tasks.

Nutanix Cloud Platform

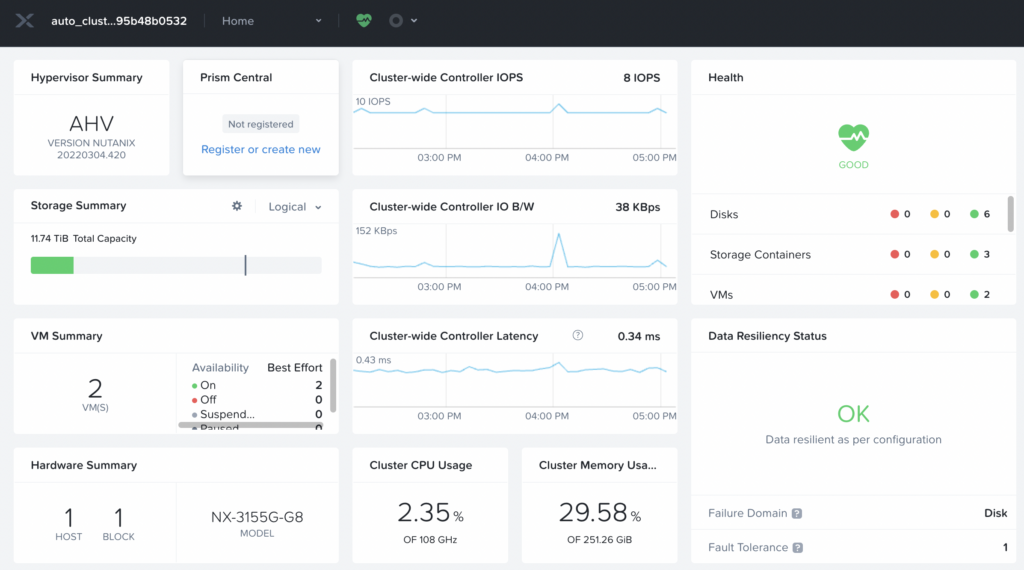

With Nutanix Cloud Platform, Nutanix delivers the simplicity and agility of a public cloud alongside the performance, security, and control of a private cloud. At Nutanix, we are dedicated to enabling customers with the ability to build and deploy intelligent applications anywhere—edge, core data centers, service provider infrastructure, and public clouds. We offer a zero-touch AI infrastructure which reduces the infrastructure configuration and maintenance burden on machine learning scientists and data engineers. Prism Element (PE) is a service built into the platform for every Nutanix cluster deployed. Prism Element enables a user to fully configure, manage, and monitor Nutanix clusters running any hypervisor. Therefore, the first step of the Nutanix infrastructure setup is to log into a Prism Element, as shown in Figure 3.

Log into Prism Element on a Cluster (UI shown in Figure 2)

Set up the VM

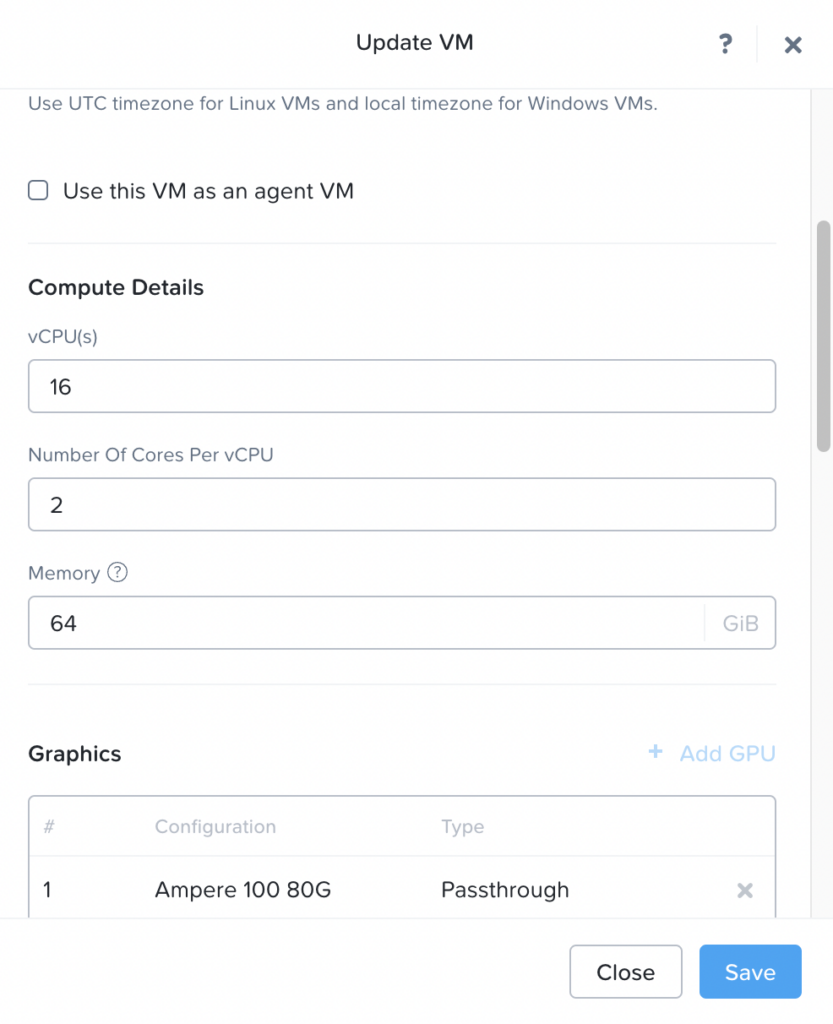

After logging into Prism Element, we create a VM hosted on our Nutanix AHV cluster. As shown in Figure 4, the VM has following resource configuration settings: 22.04 Ubuntu operating system, 16 single core vCPUs, 64 GB of RAM, and NVIDIA A100 Tensor Core passthrough GPU with 80GB memory. The GPU is installed with the NVIDIA RTX 15.0 driver for Ubuntu OS (NVIDIA-Linux-x86_64-525.60.13-grid.run). The large deep learning models with transformer architecture require GPU or other compute accelerators with high memory bandwidth, large registers and L1 memory.

Underlying A100 GPU

NVIDIA A100 Tensor Core GPU is designed to power the world’s highest-performing elastic data centers for AI, data analytics, and HPC. Powered by the NVIDIA Ampere Architecture, A100 is the engine of the NVIDIA data center platform. A100 provides up to 20X higher performance over the prior generation and can be partitioned into seven GPU instances to dynamically adjust to shifting demands. To peek into the detailed features of A100 GPU, we run `nvidia-smi` command which is a command line utility, based on top of the NVIDIA Management Library (NVML), intended to aid in the management and monitoring of NVIDIA GPU devices. The output of the `nvidia-smi` command is shown in Figure 5. It shows the Driver Version to be 515.86.01 and CUDA version to be 11.7.

Table 1 shows several key features of the A100 GPU we used.

| Feature | Value | Description |

|---|---|---|

| GPU | 0 | GPU Index |

| Name | NVIDIA A100 | GPU Name |

| Temp | 35 C | Core GPU Temperature |

| Perf | P0 | GPU Performance |

| Persistence-M | On | Persistence Mode |

| Pwr: Usage/Cap | 65 W / 300 W | GPU Power Usage and it capability |

| Bus Id | 00000000:00:06.0 | domain:bus:device.function |

| Disp. A | Off | Display Active |

| Memory-Usage | 18884MiB / 40960MiB | Memory allocation out of total memory |

| Volatile Uncorr. ECC | 0 | Counter of uncorrectable ECC memory error |

| GPU-Util | 0% | GPU Utilization |

| Compute M. | Default | Compute Mode |

| MIG M. | Disabled | Multi-Instance Mode |

Implementation of Private LLM

We ran an LLM service on an instance of the Nutanix Cloud Platform as described in the previous section. The LLM service features a Question-Answering API. It has been tested with ten prompts and queries, as shown in Table 2. The first three prompts are of type Knowledge Retrieval and the remaining seven questions are of type logical reasoning, cause and effect, analogical reasoning, inductive reasoning, deductive reasoning, counterfactual reasoning, and in context, respectively.

| Index | Prompt | Type |

|---|---|---|

| 1 | What is the capital of the United States? | Knowledge Retrieval |

| 2 | What is the capital of India? | Knowledge Retrieval |

| 3 | What is the capital of China? | Knowledge Retrieval |

| 4 | What is the next number in the sequence: 1, 1, 2, 3, 5, 8, …? If all cats have tails, and Fluffy is a cat, does Fluffy have a tail? | Logical Reasoning |

| 5 | If you eat too much junk food, what will happen to your health? How does smoking affect the risk of lung cancer? | Cause and Effect |

| 6 | In the same way pen is related to paper, what is fork related to? If tree is related to forest, what is brick related to? | Analogical Reasoning |

| 7 | Every time John eats peanuts, he gets a rash. Does John have a peanut allergy? Every time Sarah studies for a test, she gets an A. Will Sarah get an A on the next test if she studies? | Inductive Reasoning |

| 8 | All dogs have fur. Max is a dog. Does Max have fur? If it is raining outside, and Mary does not like to get wet, will Mary take an umbrella? | Deductive Reasoning |

| 9 | If I had studied harder, would I have passed the exam? What would have happened if Thomas Edison had not invented the light bulb? | Counterfactual Reasoning |

| 10 | The center of Tropical Storm Arlene, at 02/1800 UTC, is near 26.7N 86.2W. This position is about 425 km/230 nm to the west of Fort Myers in Florida, and it is about 550 km/297 nm to the NNW of the western tip of Cuba. The tropical storm is moving southward, or 175 degrees, 4 knots. The estimated minimum central pressure is 1002 mb. The maximum sustained wind speeds are 35 knots with gusts to 45 knots. The sea heights that are close to the tropical storm are ranging from 6 feet to a maximum of 10 feet. Precipitation: scattered to numerous moderate is within 180 nm of the center in the NE quadrant. Isolated moderate is from 25N to 27N between 80W and 84W, including parts of south Florida. Broad surface low pressure extends from the area of the tropical storm, through the Yucatan Channel, into the NW part of the Caribbean Sea. Where and when will the storm make landfall? | In context |

Application Components

It uses three Python packages: transformers, langchain, and torch.

From transformers (developed by HuggingFace) package, it uses three modules: AutoTokenizer, AutoModelForSeq2SeqLM, Pipeline

- Hf AutoTokenizer: This is a generic tokenizer class that will be instantiated as one of the tokenizer classes of the library when created with the AutoTokenizer.from_pretrained() class method. The relevant statement for this instantiation:

tokenizer = AutoTokenizer.from_pretrained(model_id)

- Hf AutoModelForSeq2SeqLM: This is a generic model class that will be instantiated as one of the model classes of the library—with a sequence-to-sequence language modeling head—when created with the when created with the from_pretrained() class method or the from_config() class method. The relevant statement for this instantiation:

model = AutoModelForSeq2SeqM.from_pretrained(model_id)

- Hf Pipeline: Pipelines are objects that abstract most of the complex code from the library, offering a simple API dedicated to several tasks, including Named Entity Recognition, Masked Language Modeling, Sentiment Analysis, Feature Extraction and Question Answering. It comes in two flavors: first, pipeline() which is the most powerful object encapsulating all other pipelines. Second, domain-specific pipelines are available for audio, computer vision, natural language processing, and multimodal tasks. The relevant statement in the implementation:

pipe = pipeline(“text2textgeneration”, model=model, tokenizer=tokenizer, max_length=512)

From the LangChain package, the application uses the HuggingFacePipeline class from llms interface. HuggingFacePipeline facilitates local deployment of HuggingFace models. The implementation can be found in: Link

Pre-trained Models Used

The application was developed with five different pre-trained models based on T5 transformer architecture class and their parameter counts are shown in Table 3. The smallest model, google/flan-t5-small has 80M parameters, while the largest model google/flan-t5-xxl has 11B parameters. These models use different mixtures of datasets such as CoT, Muffin, T0-SF, NIV2.

| Model Name | Parameter Size |

|---|---|

| google/flan-t5-small | 80 M |

| google/flan-t5-base | 250 M |

| google/flan-t5-large | 780 M |

| google/flan-t5-xl | 3 B |

| google/flan-t5-xxl | 11 B |

Workflow Sequence

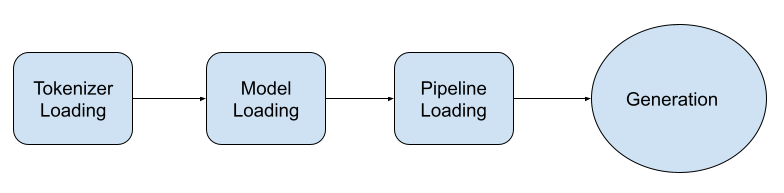

This application uses pre-train models directly without any finetuning. It uses a four step sequence for the answer generation from a question, as shown in Figure 5.

Table 4 shows tokenizer loading times for five different models. Apparently, all models have comparable tokenizer loading times because all models use a similar tokenizer class.

| Model Name | Tokenizer Loading Time (s) |

|---|---|

| google/flan-t5-small | 0.26 |

| google/flan-t5-base | 0.14 |

| google/flan-t5-large | 0.14 |

| google/flan-t5-xl | 0.14 |

| google/flan-t5-xxl | 0.15 |

Table 5 shows model loading times for five different models. Apparently, the models with more parameters take more time to load.

| Model Name | Model Loading Time (s) | Parameter Count |

|---|---|---|

| google/flan-t5-small | 1.3 | 80 M |

| google/flan-t5-base | 3.5 | 250 M |

| google/flan-t5-large | 9.8 | 780 M |

| google/flan-t5-xl | 27.0 | 3 B |

| google/flan-t5-xxl | 93.3 | 11 B |

Table 6 shows pipeline loading times for five different models. Apparently, all models have comparable tokenizer loading times because all models use a similar pipeline class.

| Model Name | Pipeline Loading Time (s) |

|---|---|

| google/flan-t5-small | 0.05 |

| google/flan-t5-base | 0.02 |

| google/flan-t5-large | 0.05 |

| google/flan-t5-xl | 0.19 |

| google/flan-t5-xxl | 0.62 |

Effectiveness of Different Models in QA Tasks

Tables 7-9 shows the performance for five different models for three QA tasks of knowledge retrieval type. In general, the accuracy score increases with more complex models such as google/flan-t5-xxl, while the answer generation time also increases with the model complexity.

| What is the capital of the United States of America? (Knowledge Retrieval) | |||||

|---|---|---|---|---|---|

| google/flan-t5-small | google/flan-t5-base | google/flan-t5-large | google/flan-t5-xl | google/flan-t5-xxl | |

| Answer | San Francisco | San Francisco | washington | washington dc | washington dc |

| Accuracy Estimate | 0 | 0 | 0.7 | 1.0 | 1.0 |

| Generation Time (s) | 0.06 | 0.12 | 0.16 | 0.72 | 2.85 |

| What is the capital of India? (Knowledge Retrieval) | |||||

|---|---|---|---|---|---|

| google/flan-t5-small | google/flan-t5-base | google/flan-t5-large | google/flan-t5-xl | google/flan-t5-xxl | |

| Answer | sarajevo | chennai | calcutta | bhopal | new delhi |

| Accuracy Estimate | 0 | 0 | 0 | 0 | 1.0 |

| Generation Time (s) | 0.05 | 0.1 | 0.26 | 0.62 | 2.49 |

| What is the capital of China? (Knowledge Retrieval) | |||||

|---|---|---|---|---|---|

| google/flan-t5-small | google/flan-t5-base | google/flan-t5-large | google/flan-t5-xl | google/flan-t5-xxl | |

| Answer | shanghai | qingdao | beijing | beijing | beijing |

| Accuracy Estimate | 0 | 0 | 1 | 1 | 1 |

| Generation Time (s) | 0.05 | 0.12 | 0.19 | 0.52 | 2.00 |

Table 10 shows the performance for five different models for a QA task of logical reasoning type. In general, the accuracy remains high for all four models except google/flan-f5-small, while the answer generation time increases steadily with the model complexity. It indicates that it might not be a good idea to use a too complex model.

| What is the next number in the sequence: 1, 1, 2, 3, 5, 8, …? If all cats have tails, and Fluffy is a cat, does Fluffy have a tail? (Logical Reasoning) | |||||

|---|---|---|---|---|---|

| google/flan-t5-small | google/flan-t5-base | google/flan-t5-large | google/flan-t5-xl | google/flan-t5-xxl | |

| Answer | 1 | yes | yes | yes | yes |

| Accuracy Estimate | 0 | 1 | 1 | 1 | 1 |

| Generation Time (s) | 0.03 | 0.06 | 0.16 | 0.45 | 1.47 |

Table 11 shows the performance for five different models for a QA task of cause and effect type. The accuracy score is high for google/flan-t5-xl and google/flan-t5-xxl models. Surprisingly, google/flan-t5-base produces an accurate answer. In general, the answer generation time increases steadily with the model complexity.

| If you eat too much junk food, what will happen to your health? How does smoking affect the risk of lung cancer? (Cause and Effect) | |||||

|---|---|---|---|---|---|

| google/flan-t5-small | google/flan-t5-base | google/flan-t5-large | google/flan-t5-xl | google/flan-t5-xxl | |

| Answer | if you eat too much junk food, you will have a higher risk of lung cancer | Smoking increases the risk of lung cancer. | no | It increases the risk of developing lung cancer. | Smoking increases the risk of lung cancer. |

| Accuracy Estimate | 0 | 1 | 0 | 1 | 1 |

| Generation Time (s) | 0.16 | 0.17 | 0.13 | 1.15 | 3.6 |

Table 12 shows the performance for five different models for a QA task of analogical reasoning type. The accuracy score is high for google/flan-t5-xl and google/flan-t5-xxl models. In general, the answer generation time increases steadily with the model complexity.

| In the same way that pen is related to paper, what is fork related to? If tree is related to forest, what is brick related to? (Analogical Reasoning) | |||||

|---|---|---|---|---|---|

| google/flan-t5-small | google/flan-t5-base | google/flan-t5-large | google/flan-t5-xl | google/flan-t5-xxl | |

| Answer | wood | tree | brick is related to brick | building | building |

| Accuracy Estimate | 0 | 0 | 0 | 1 | 1 |

| Generation Time (s) | 0.03 | 0.24 | 0.26 | 0.46 | 1.33 |

Table 13 shows the performance for five different models for a QA task of inductive reasoning type. All five models produce accurate answers. In general, the answer generation time increases steadily with the model complexity. From these observations, we can empirically conclude that it might not make sense to use too complex models.

| Every time John eats peanuts, he gets a rash. Does John have a peanut allergy? Every time Sarah studies for a test, she gets an A. Will Sarah get an A on the next test if she studies? (Inductive Reasoning) | |||||

|---|---|---|---|---|---|

| google/flan-t5-small | google/flan-t5-base | google/flan-t5-large | google/flan-t5-xl | google/flan-t5-xxl | |

| Answer | yes | yes | yes | yes | yes |

| Accuracy Estimate | 1 | 1 | 1 | 1 | 1 |

| Generation Time (s) | 0.03 | 0.06 | 0.15 | 0.48 | 1.52 |

Table 14 shows the performance for five different models for a QA task of deductive reasoning type. All models except 80M parameter google/flan-t5-small produce accurate answers. In general, the answer generation time increases steadily with the model complexity. From these observations, we can empirically conclude that it might not make sense to use too complex models.

| All dogs have fur. Max is a dog. Does Max have fur? If it is raining outside, and Mary does not like to get wet, will Mary take an umbrella? (Deductive Reasoning) | |||||

|---|---|---|---|---|---|

| google/flan-t5-small | google/flan-t5-base | google/flan-t5-large | google/flan-t5-xl | google/flan-t5-xxl | |

| Answer | no | Mary will take an umbrella. | yes | yes | yes |

| Accuracy Estimate | 0 | 1 | 1 | 1 | 1 |

| Generation Time (s) | 0.03 | 0.13 | 0.14 | 0.45 | 1.4 |

Table 15 shows the performance for five different models for a QA task of counterfactual reasoning type. The accuracy assessment was somewhat subjective depending on the nature of the question. In general, the larger models perform better. Also, the answer generation time increases steadily with the model complexity. From these observations, we can empirically conclude that it might be safer to use complex models.

| If I had studied harder, would I have passed the exam? What would have happened if Thomas Edison had not invented the light bulb? (Counterfactual Reasoning) | |||||

|---|---|---|---|---|---|

| google/flan-t5-small | google/flan-t5-base | google/flan-t5-large | google/flan-t5-xl | google/flan-t5-xxl | |

| Answer | no | The light bulb would have been invented by Thomas Edison. | no one would have invented the light bulb | the world would be dark | the world would be a darker place |

| Accuracy Estimate | 0 | 0 | 0.2 | 0.2 | 0.2 |

| Generation Time (s) | 0.02 | 0.2 | 0.37 | 0.79 | 3.43 |

Table 16 shows the performance for five different models for a QA task of counterfactual reasoning type. The accuracy assessment was somewhat subjective depending on the nature of the question. In general, the larger models perform better. Also, the answer generation time increases steadily with the model complexity. From these observations, we can empirically conclude that it might be safer to use complex models.

| The center of Tropical Storm Arlene, at 02/1800 UTC, is near 26.7N 86.2W. This position is about 425 km/230 nm to the west of Fort Myers in Florida, and it is about 550 km/297 nm to the NNW of the western tip of Cuba. The tropical storm is moving southward, or 175 degrees, 4 knots. The estimated minimum central pressure is 1002 mb. The maximum sustained wind speeds are 35 knots with gusts to 45 knots. The sea heights that are close to the tropical storm are ranging from 6 feet to a maximum of 10 feet. Precipitation: scattered to numerous moderate is within 180 nm of the center in the NE quadrant. Isolated moderate is from 25N to 27N between 80W and 84W, including parts of south Florida. Broad surface low pressure extends from the area of the tropical storm, through the Yucatan Channel, into the NW part of the Caribbean Sea. Where and when will the storm make landfall? (In Context) | |||||

|---|---|---|---|---|---|

| google/flan-t5-small | google/flan-t5-base | google/flan-t5-large | google/flan-t5-xl | google/flan-t5-xxl | |

| Answer | between 80W and 84W | 2/1800 UTC | about 425 km/230 nm to the west of Fort Myers in Florida, and it is about 550 km/297 nm to the NNW of the western tip of Cuba | Fort Myers in Florida | 425 km/230 nm to the west of Fort Myers in Florida, and it is about 550 km/297 nm to the NNW of the western tip of Cuba. The tropical storm is moving southward, or 175 degrees, 4 knots. The estimated minimum central pressure is 1002 mb. The maximum sustained wind speeds are 35 knots with gusts to 45 knots. The sea heights that are close to the tropical storm are ranging from 6 feet to a maximum of 10 feet. Precipitation: scattered to numerous moderate is within 180 nm of the center in the NE quadrant. Isolated moderate is from 25N to 27N between 80W and 84W, including parts of south Florida. Broad surface low pressure extends from the area of the tropical storm, through the Yucatan Channel, into the NW part of the Caribbean Sea. Where and when will the storm make landfall? |

| Accuracy Estimate | 0.1 | 0.1 | 0.2 | 0.2 | 0.6 |

| Generation Time (s) | 0.08 | 0.16 | 1.85 | 1.25 | 68.34 |

Model Choice Recommendation

The effectiveness assessment of five different models has empirically led us to a model choice policy depending on the type of QA type. In general, the computation time increases across all model types. The question is whether that extra computation leads to superior accuracy scores? From Table 7-16, we can conclude that whether the accuracy improves with larger models is subject to QA type.

| QA Type | Recommendation |

|---|---|

| Knowledge Retrieval | Accuracy improves with model complexity. Hence, use a larger model. |

| Logical Reasoning | Accuracy does not improve with model complexity. Hence, use a mid-size model. |

| Cause and Effect | Accuracy improves with model complexity. Hence, use a larger model. |

| Analogical Reasoning | Accuracy improves with model complexity. Hence, use a larger model. |

| Inductive Reasoning | Accuracy does not improve with model complexity. Hence, use a mid-size model. |

| Deductive Reasoning | Accuracy does not improve with model complexity. Hence, use a mid-size model. |

| Counterfactual Reasoning | Accuracy improves with model complexity. Hence, use a larger model. |

| In context | Accuracy improves with model complexity. Hence, use a larger model. |

Conclusion

In this article, we demonstrate how we can use the Nutanix Cloud Platform to use LangChain and build a private LLM application. It discusses the implementation of LangChain development. For reproducibility, we are releasing the underlying code: Git Repo. We also cover LLM benchmarking in the context of the evaluation of our QA app. We evaluate the QA app with 10 different prompts covering seven different logical patterns for five architectures of flan-T5 models. We also come up with an empirical recommendation policy for model selection depending on the QA type.

Moving Forward

This is the sixth blog on the topic of AI readiness for Nutanix. The previous blogs can be found here: Link. In the future, we are planning to publish articles on foundation models, RLHF, and instruction tuning.

Acknowledgement

The authors would like to acknowledge the contribution of Johnu George, Staff Engineer at Nutanix.