Introduction

Today, companies in nearly every industry are exploring opportunities in Artificial Intelligence (AI). AI has the potential to transform a wide range of industries and applications, including healthcare, Natural Language Processing (NLP), fraud detection, autonomous vehicles, robotics, and financial analysis. At Nutanix, we have over 20,000 customers across various industries who can benefit from AI through Nutanix’s AI-ready infrastructure platform services.

The Challenges of MLOps

Seamless, portable Machine Learning Operations (MLOps) is a key piece of an AI-ready infrastructure. An MLOps workflow involves data harvesting, data engineering, model training, model inference, model storage, and monitoring. A central challenge of MLOps is data locality, together with the need to refresh models as data quality changes. For example, an autonomous car generates telemetry data locally, but that data needs to be replicated to another site where the models can be retrained for better predictions and improved accuracy. To address this problem, we offer an MLOps continuum from edge to cloud.

How the Nutanix Cloud Platform helps

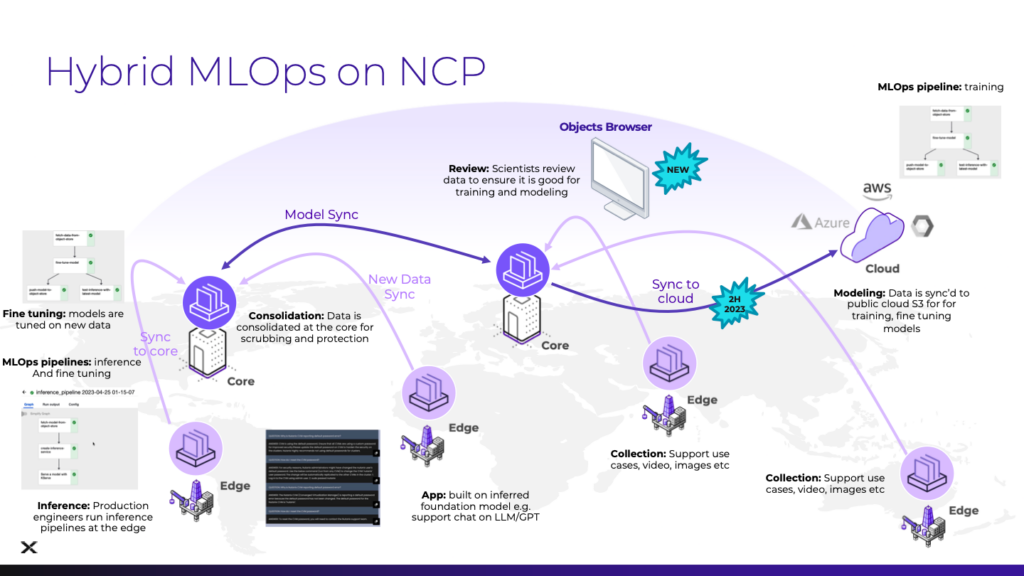

As announced at Nutanix’s 2023 .NEXT global conference, Nutanix is AI-ready and serves our customers’ AI needs with the Nutanix Cloud Platform (NCP). Similar to our DataOps continuum from Edge to Cloud via Nutanix object storage, we now provide an MLOps continuum from Edge to Cloud along with consolidated MLOps, as shown in Figure 1.

You may be wondering what I mean by an MLOps continuum from Edge to Cloud, and by data locality.

Recently, there has been a significant shift towards edge computing, where data is generated and processed on edge devices such as retail point-of-sale systems. This shift, combined with exponentially increasing data generation, creates a growing need for decentralized computing that connects back to centralized enterprise data centers and public clouds. For this reason, our MLOps continuum spans from Edge to Cloud and provides the platform support needed at each stage to optimize, manage, retrain, and fine-tune LLM deployments.

Demo

Here is an example of an AI application running on Nutanix Cloud Platform. The Nutanix Cloud Platform provides a data platform that ingests data into a Kubeflow pipeline, trains the models in data centers or the cloud, optimizes model inference at run time, and deploys the models to the Edge seamlessly. The input data can be multi-modal: text, images, audio, or video.

Video 1: Demo Video

The demo video shows how an AI bot called ‘Picasso’ is deployed at the Edge, retrained and fine-tuned at the Core, and then redeployed to the Edge using Nutanix Cloud Platform infrastructure services.

More Details

At the Edge of the continuum, we have a cluster that targets running LLMs, since low inference latency is important there. However, running LLMs at the Edge poses several challenges. Retraining models with new incoming data is compute intensive and is often constrained by the limited resources at the Edge. Fine-tuning LLMs requires clusters with substantial CPU/GPU cores, memory, and storage. This is where properly sized instances in the Core data center or Public Cloud are a better fit for retraining.

Moving up the continuum, we have the Core, where near-edge servers and gateways are placed. These are more powerful than the Edge cluster(s) and can run more complex machine learning models or fine-tune and refine LLMs more efficiently. Further up the continuum, we have the Cloud, which provides virtually unlimited resources. Nutanix works with all major Public Cloud platforms, such as AWS, Azure, and GCP, which can be used for scalable and cost-effective training and deployment of machine learning models. Through this hybrid and multi-cloud environment, Nutanix provides the flexibility and scalability to deploy machine learning models across multiple clouds or on-premises environments.
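As a rough illustration of the Edge, Core, and Cloud roles described above, the following sketch picks a tier for a workload. The `Workload` type, the `place` function, and the GPU capacity figures are all hypothetical and chosen only for illustration; they do not reflect any Nutanix API or real cluster sizing.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    EDGE = "edge"
    CORE = "core"
    CLOUD = "cloud"


@dataclass(frozen=True)
class Workload:
    name: str
    gpus_needed: int
    latency_sensitive: bool


# Hypothetical GPU capacity per tier (illustrative numbers only).
# The Cloud is treated as effectively unbounded, so it has no entry.
GPU_CAPACITY = {Tier.EDGE: 1, Tier.CORE: 8}


def place(workload: Workload) -> Tier:
    """Pick the lowest tier of the continuum that fits the workload."""
    # Latency-sensitive inference stays at the Edge when it fits there.
    if workload.latency_sensitive and workload.gpus_needed <= GPU_CAPACITY[Tier.EDGE]:
        return Tier.EDGE
    # Heavier jobs such as fine-tuning move up to the Core.
    if workload.gpus_needed <= GPU_CAPACITY[Tier.CORE]:
        return Tier.CORE
    # Anything larger goes to the Cloud.
    return Tier.CLOUD
```

Under these assumed capacities, a single-GPU inference service lands at the Edge, a four-GPU fine-tuning job at the Core, and a 64-GPU training run in the Cloud.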

Finally, data generated at the Edge cluster(s) is stored in the local Nutanix object store. With Nutanix Objects replication turned on, all the data at the Edge becomes available at the Core or Cloud. As soon as a notification of the Objects replication event arrives, the ML model fine-tuning workflow is automatically triggered in the Kubeflow pipeline at the Core or Cloud. The workflow generates a new model and writes it to the object store at the Core or Cloud. The new model is then replicated back to the Edge, where it is automatically redeployed at the same endpoint on the Edge cluster(s).
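The loop described above can be sketched with a small in-memory simulation. This is a minimal sketch, not the Nutanix Objects or Kubeflow API: `ObjectStore`, `replicate`, `fine_tune`, `EdgeEndpoint`, and the `data/` and `models/` key prefixes are all hypothetical stand-ins, and "fine-tuning" here only increments a version number.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ObjectStore:
    """In-memory stand-in for an object store (hypothetical)."""
    name: str
    objects: Dict[str, bytes] = field(default_factory=dict)
    subscribers: List[Callable[[str, bytes], None]] = field(default_factory=list)

    def put(self, key: str, value: bytes) -> None:
        self.objects[key] = value

    def receive_replica(self, key: str, value: bytes) -> None:
        # Store the replicated object, then notify subscribers of the event.
        self.objects[key] = value
        for callback in list(self.subscribers):
            callback(key, value)


def replicate(source: ObjectStore, target: ObjectStore, key: str) -> None:
    """Copy one object from source to target, firing target's notifications."""
    target.receive_replica(key, source.objects[key])


def fine_tune(current_version: int, data: bytes) -> int:
    """Placeholder for the fine-tuning pipeline: only bumps the version."""
    return current_version + 1


class EdgeEndpoint:
    """Placeholder for the inference endpoint at the Edge."""

    def __init__(self) -> None:
        self.model_version = 1

    def redeploy(self, key: str, value: bytes) -> None:
        # Only react to replicated model objects, not to raw data.
        if key.startswith("models/"):
            self.model_version = int(value.decode())


def run_demo() -> int:
    edge, core = ObjectStore("edge"), ObjectStore("core")
    endpoint = EdgeEndpoint()

    def on_data_replicated(key: str, value: bytes) -> None:
        if not key.startswith("data/"):
            return
        current = int(core.objects.get("models/latest", b"1").decode())
        new_version = fine_tune(current, value)
        core.put("models/latest", str(new_version).encode())
        # Replicate the new model back to the Edge.
        replicate(core, edge, "models/latest")

    core.subscribers.append(on_data_replicated)
    edge.subscribers.append(endpoint.redeploy)

    # New data lands at the Edge and is replicated to the Core.
    edge.put("data/telemetry-001", b"new samples")
    replicate(edge, core, "data/telemetry-001")
    return endpoint.model_version
```

Calling `run_demo()` walks through one full cycle: Edge data is replicated to the Core, the notification triggers the placeholder fine-tuning step, and the resulting model is replicated back and picked up by the Edge endpoint. In a real deployment, each callback would be replaced by the platform's own replication notifications and pipeline triggers.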

Next Steps

- A deeper dive into the Nutanix Edge AI solution

- Find out more about running next generation AI workloads on Nutanix Cloud Platform (NCP)

- Find out more about how customers can train a transformer model on Nutanix Cloud Platform (NCP)

- Find out more about how the KServe inference platform works on Nutanix Cloud Platform (NCP)

Engineering Credits

Special thanks to the amazing AI/ML Engineering team at Nutanix for making Nutanix Cloud Platform (NCP) AI-ready for our customers.

Debojyoti Dutta, Johnu George, Rajat Ghosh, Ajay Nagar, Deepanker Gupta, Gavrish Prabhu, Datta Nimmaturi, Laura Jordana, Veer Kumar, Piush Sinha.